Learning Universal Policies via Text-Guided Video Generation

Abstract

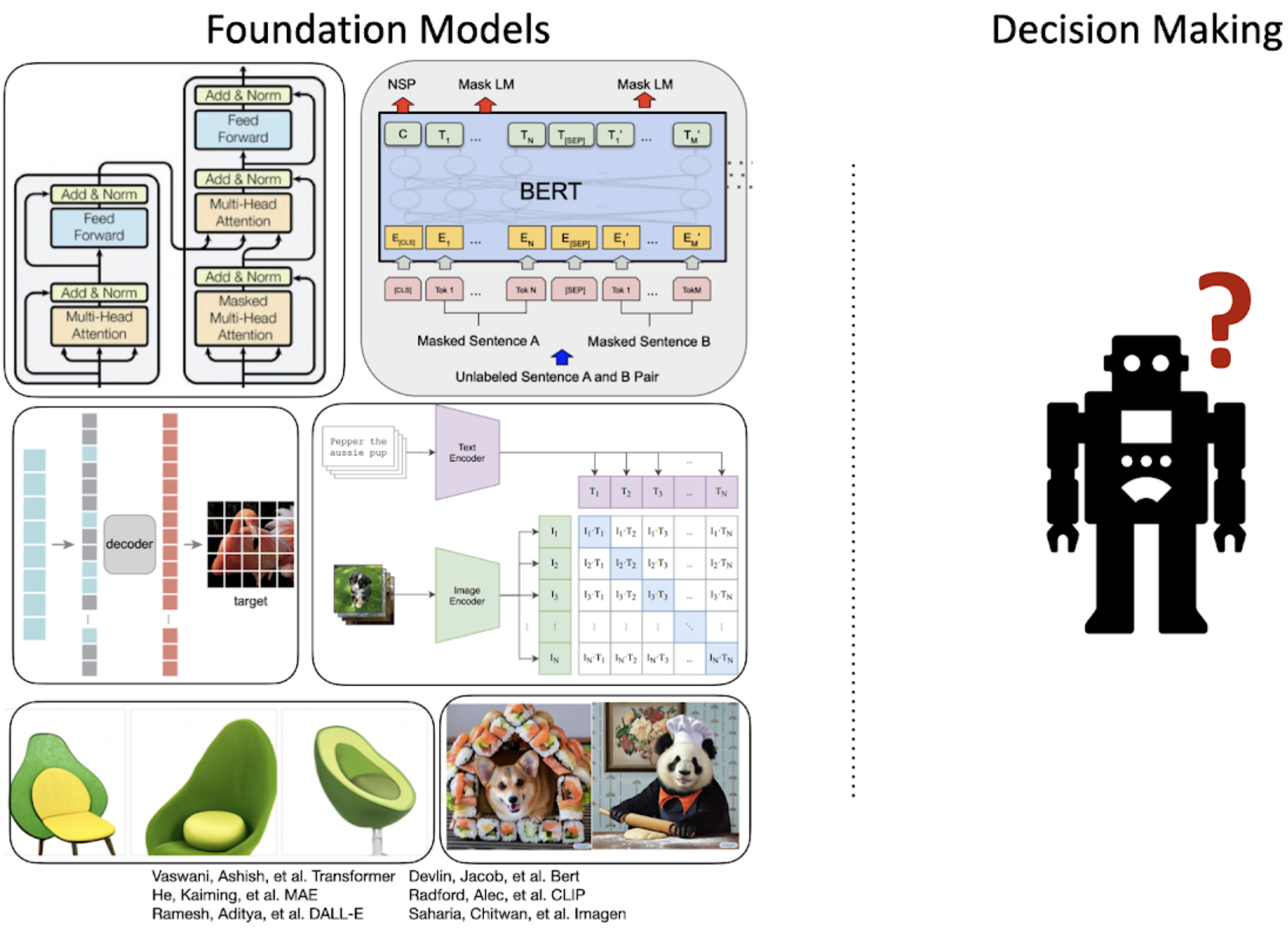

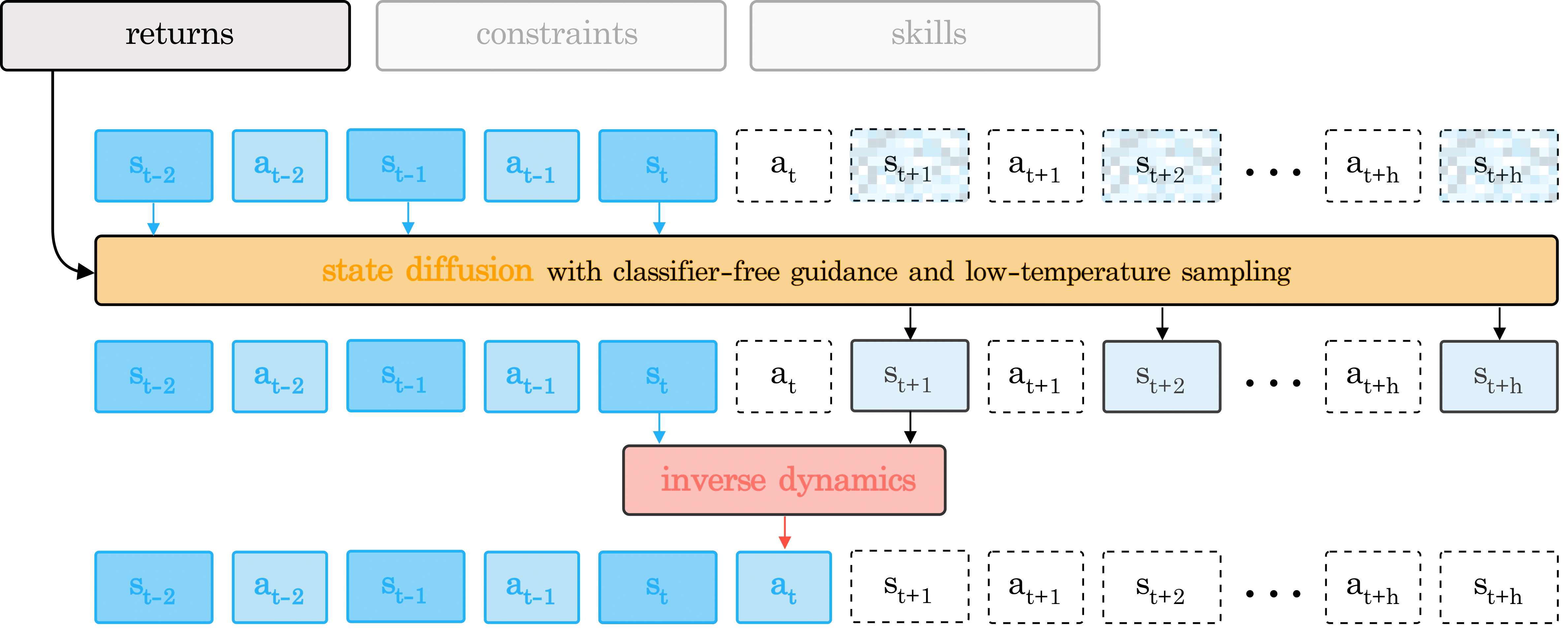

A goal of artificial intelligence is to construct an agent that can solve a wide variety of tasks. Recent progress in text-guided image synthesis has yielded models with an impressive ability to generate complex novel images, exhibiting rich combinatorial generalization across domains. Motivated by this success, we investigate whether such tools can be used to construct more general-purpose agents. Specifically, we cast the sequential decision-making problem as a text-conditioned video generation problem: given a text-encoded specification of a desired goal, a planner synthesizes a set of future frames depicting its planned actions, and the actions are then extracted from the generated video. By leveraging text as our underlying goal specification, we are able to naturally and combinatorially generalize to unseen goals. Our policy-as-video formulation can further represent environments with different state and action spaces in a unified space of images, enabling learning and generalization across a wide range of robotic manipulation tasks. Finally, by leveraging pretrained language embeddings and widely available videos on the internet, our formulation enables knowledge transfer through predicting highly realistic video plans for real robots.
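The policy-as-video decomposition (plan a video from text, then read actions off consecutive frames) can be illustrated with a toy sketch. It is not the UniPi implementation. The hypothetical `toy_video_planner` stands in for the text-conditioned video generator, and the hypothetical `toy_inverse_dynamics` stands in for the action extractor, here modeled as an inverse dynamics function over pairs of consecutive frames. As further assumptions, "frames" are 2D positions rather than images, and the `GOALS` vocabulary mapping text to targets is invented purely for illustration.

```python
from typing import List, Tuple

# Toy "frame": a 2D end-effector position standing in for a rendered image (assumption).
Frame = Tuple[float, float]
# Toy action: a 2D displacement (assumption).
Action = Tuple[float, float]

# Hypothetical goal vocabulary: maps a text goal to a target position.
GOALS = {
    "move to red block": (1.0, 0.0),
    "move to blue bowl": (0.0, 1.0),
}


def toy_video_planner(text: str, start: Frame, horizon: int) -> List[Frame]:
    """Stand-in for a text-conditioned video generator.

    Conditioned on the current frame and a text goal, it returns horizon + 1
    frames that linearly interpolate from the start to the named goal.
    """
    gx, gy = GOALS[text]
    sx, sy = start
    return [
        (sx + (gx - sx) * t / horizon, sy + (gy - sy) * t / horizon)
        for t in range(horizon + 1)
    ]


def toy_inverse_dynamics(frame: Frame, next_frame: Frame) -> Action:
    """Stand-in for a learned inverse dynamics model: infers the action
    (here, the displacement) that takes `frame` to `next_frame`."""
    return (next_frame[0] - frame[0], next_frame[1] - frame[1])


def plan_and_act(text: str, start: Frame, horizon: int = 4):
    """Synthesize a video plan, then extract one action per frame transition."""
    frames = toy_video_planner(text, start, horizon)
    actions = [toy_inverse_dynamics(a, b) for a, b in zip(frames, frames[1:])]
    return frames, actions


def rollout(start: Frame, actions: List[Action]) -> Frame:
    """Execute the extracted actions in the toy environment."""
    x, y = start
    for dx, dy in actions:
        x, y = x + dx, y + dy
    return (x, y)


frames, actions = plan_and_act("move to red block", (0.0, 0.0), horizon=4)
print(actions)                       # four displacements of (0.25, 0.0)
print(rollout((0.0, 0.0), actions))  # reaches the goal position (1.0, 0.0)
```

The design point this mirrors is that the planner only ever produces observations, so the same planning interface could in principle serve environments with different action spaces. Only the frame-to-action extractor would need to change per embodiment.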

Combinatorial Generalization

Below, we illustrate generated videos for unseen combinations of goals. Our approach is able to synthesize a diverse set of behaviors that satisfy unseen language subgoals.

Multitask Learning

Below, we illustrate generated videos for unseen tasks. Our approach is also able to synthesize a diverse set of behaviors that satisfy unseen language tasks.

Real Robot Videos

Below, we further illustrate videos generated from language instructions and unseen real images. Our approach is able to synthesize a diverse set of behaviors that satisfy the language instructions.

Our approach is also able to generate videos of robot behaviors given unseen natural language instructions. Note that pretraining on a large online dataset of text and videos significantly improves generalization to unseen natural language queries.

Source Code

Most of the experiments in the paper were run at Google, and that source code cannot be released. You can use this GitHub repo for an open-source codebase for training UniPi.

Citation

@article{du2023learning,
  title={Learning Universal Policies via Text-Guided Video Generation},
  author={Du, Yilun and Yang, Mengjiao and Dai, Bo and Dai, Hanjun and Nachum, Ofir and Tenenbaum, Joshua B and Schuurmans, Dale and Abbeel, Pieter},
  journal={arXiv e-prints},
  pages={arXiv--2302},
  year={2023}
}